Protocols of Power: The Hidden Politics of Enterprise Multi-Agent Systems

A dissertation submitted in partial fulfilment of the requirements for the degree of Master of Studies in Artificial Intelligence Ethics and Society

Alec Foster

Abstract

The rush to deploy enterprise multi-agent systems has created a governance vacuum. This dissertation examines the politics embedded in the communication protocols designed to address this gap, with particular focus on the dominant Agent2Agent (A2A) Project. Drawing on Foucauldian analytics and Science and Technology Studies (STS), I argue that this supposedly neutral, open standard enacts a normative order that privileges centralized, platform-centric control while masking its political nature.

By combining Foucauldian discourse analysis with a deconstruction of A2A’s technical and governance documentation, I show that the protocol functions as a contemporary ‘diagram of power’: an architecture that manages conduct at a distance. The analysis uncovers an embedded power hierarchy and shows how technical mechanisms for identity and surveillance transform agents into compliant, auditable subjects while repositioning human workers as managers of AI teams. This research provides the first sustained analysis of agent-to-agent protocol politics, offering a framework to make this infrastructural power visible and, therefore, subject to democratic contestation.

1. The Unexamined Politics of Agent Infrastructure

1.1 The Enterprise Agent Governance Vacuum

Enterprise software is undergoing a tectonic shift, driven by an executive imperative to mass-deploy artificial intelligence agents in pursuit of improved profitability. Corporate leaders now articulate a radical vision of hybrid human-agent workforces, with NVIDIA’s CEO predicting swarms of “100 million AI assistants” working alongside his company’s human workforce, and Salesforce’s CEO plainly stating “We are the last generation to manage only humans” (The Bg2 Podcast, 2024; Lotz, 2025).

While such projections undoubtedly serve the commercial interests of these executives, the transformation is already underway. Bank of New York Mellon (BNY) ‘employs’ AI-powered “digital employees” with company logins, direct managers, and autonomous capabilities (Bousquette, 2025), at a fraction of the cost of human workers. This adoption is poised to accelerate: Gartner predicts that by 2028, AI agents will make 15% of day-to-day work decisions, a staggering rise from effectively zero in 2024 (Coshow, 2024).

The frameworks for controlling AI agents lag far behind the pace of deployment. While this challenge is new in scale, it is not new in kind: researchers in multi-agent systems have long warned about the governance difficulties of autonomous agent coordination (Jennings, 2000). The issue has taken on new urgency as organizations move from using AI as a tool to deploying it as an autonomous workforce. The challenge is shifting from managing siloed AI pilot programs to governing entire ecosystems of agents that can independently initiate actions, coordinate with one another, and make decisions (Gabriel et al., 2024). This shift from ‘AI-as-tool’ to ‘AI-as-worker’ creates unprecedented governance challenges. How do we maintain accountability when agents can coordinate and make decisions without human oversight? How do we ensure security when agents have access to systems previously reserved for humans? These questions necessitate new frameworks that extend beyond traditional AI safety approaches (Kolt, 2025). The technical standards emerging today will shape organizational and social dynamics for years to come.

1.2 From Fragmentation to Consolidation: The Rise of A2A

The initial push to standardize agent-to-agent communication quickly splintered into competing philosophies. Google introduced its Agent2Agent (A2A) protocol in April 2025, championing a web-native architecture designed for broad, cross-vendor interoperability (Surapaneni, Jha, et al., 2025a). Just one month later, IBM announced its own standard, the Agent Communication Protocol (ACP), under the governance of the Linux Foundation (IBM Research, n.d.). Promoted as a community-led alternative to A2A, ACP champions a decentralized, local-first architecture tailored for low-latency or offline environments, such as robotics (A2A Protocol, n.d.-b; Besen, 2025). These competing designs reflect divergent ideologies. Where A2A’s HTTP-based model and public Agent Card discovery were built for an open, internet-scale ecosystem, ACP’s minimalist, local-broadcast model was designed for maximum flexibility within specific runtimes. A2A had secured immense industry backing, but it needed to shed its perception as a “Google-led” project to reach utility status.

In June 2025, the landscape underwent a sudden consolidation, triggered by a decisive political maneuver: Google donated the A2A protocol to The Linux Foundation, a non-profit entity that stewards open-source projects. This act reframed the protocol as a collaborative, industry-wide initiative, creating an irresistible center of gravity. It brought previously uncommitted giants such as Amazon Web Services (AWS) under a single umbrella and effectively neutralized ACP by co-opting its chief benefit, neutral stewardship (The Linux Foundation, 2025). This masterstroke established the A2A Project as the undisputed dominant standard, making it the primary object of analysis for understanding how power will be structured in the next generation of enterprise AI.

1.3 Thesis and Contribution

I argue that the A2A Protocol is not a neutral technical facilitator but a potent political infrastructure. Despite its nominally “open” nature, it functions as a sophisticated technology of power that concentrates authority, shapes conduct, and produces governable subjects. I analyze the protocol as a contemporary ‘diagram of power,’ applying a Foucauldian lens to reveal its political and governmental force (Foucault, 1977).

This research provides the first sustained analysis of the A2A Project’s governance structure, addressing a gap in the literature by shifting the focus from mitigating the risks of agentic AI to examining the governmental power embedded within the standard that orchestrates them. This exploration is guided by a central research question:

How does the Agent2Agent (A2A) Protocol function as a governmental technology that shapes conduct and enacts a normative order through its technical architecture and governance model?

Rather than treating the protocol’s specifications as neutral engineering documents, my analysis deconstructs them as political texts that enact power. The subsequent chapters will undertake this analysis to reveal the specific mechanisms through which this power operates.

1.4 Defining the Terrain: Agents, Systems, and Protocols

To embark on this analysis, it is essential to define its core concepts. As described by Russell and Norvig (2021), an AI agent is a system that can observe its surroundings, process that information, and take actions to influence its environment. In this research, the term denotes an autonomous software program designed to make decisions and take goal-oriented actions. When multiple such agents interact to solve complex problems, they form what researchers refer to as a multi-agent system (MAS). In his foundational text, Michael Wooldridge defines MAS as a system that “consists of a number of agents, which interact with one-another” and where agents are autonomous entities that may be acting on behalf of users with different goals (2009, p. 3).

The connective tissue of a MAS is a protocol for agent-to-agent communication. Broadly defined, agent-to-agent protocols enable diverse agents to interact with one another. By setting rules for formatting messages and discovering other agents, these protocols enact specific models of coordination and control (Lisowski, 2025b). For instance, a protocol’s design dictates whether control is centralized, such as in a hierarchy, or decentralized, where agents act as peers. This foundational layer makes collaboration possible by establishing the terms of engagement.

While the concept is generic, a specific implementation is the central object of this study: the A2A Project hosted by the Linux Foundation. This consolidation represents what scholars of technology standards recognize as a classic pattern: initial competition followed by consortium formation to achieve market dominance (Blind & Mangelsdorf, 2016). Seeded by Google’s original design, this project aims to become the universal standard for agent interoperability (Linux Foundation, 2025). This strategy mirrors Google’s approach to other ostensibly “open” ecosystems, including Android and Chrome, where it maintains de facto control despite a distributed development model. For example, Google has used its control over the Android operating system and the Play Store to impose contracts that stifle competition, a practice that has been the subject of major antitrust lawsuits and regulatory fines (Hollister, 2023; European Commission, 2018). This historical context helps us understand the potential power dynamics at play within the A2A Project.

To maintain clarity, this dissertation will use “agent-to-agent protocol(s)” to refer to the general concept, and “A2A”, “the A2A Project”, or “the A2A protocol” to refer to this specific, consolidated standard. Technical terms reproduced verbatim from protocol documentation appear in italics to distinguish them from my analysis.

1.5 Scope and Delimitations

The primary object of this study is the A2A Project, complemented by comparative analyses of ACP and Anthropic’s Model Context Protocol (MCP). I analyze A2A by deconstructing its publicly available technical and governance documentation as political texts. The study’s contribution is therefore conceptual; its strength lies in examining the protocol’s politics at its formative stage, before implementation complexities obscure its foundational logics. While academic literature on the A2A protocol is only just beginning to emerge, it has primarily focused on technical and security dimensions (e.g., Li & Xie, 2025; Louck, Stulman, & Dvir, 2025). This dissertation deliberately focuses on agent-to-agent coordination, bracketing the downstream human impacts to maintain a sharp focus on the governmental power embedded within the infrastructure itself.

1.6 Dissertation Roadmap

To analyze protocols as political artifacts, I first assemble a conceptual toolkit from Foucauldian analytics and Science and Technology Studies (STS). I then operationalize this toolkit into a methodology for deconstructing technical specifications as governmental texts. This framework guides the dissertation’s central analyses of the A2A protocol and its governance. These analyses then ground a discussion of A2A’s political and ethical implications, focused on the new political economy it enacts. The dissertation concludes by synthesizing these findings to answer the core research question and reflecting on the stakes of protocol alignment.

2. Conceptual Foundations - Power, Infrastructure, and Digital Governance

2.1 Assembling the Critical Toolkit

This chapter constructs the conceptual lens required to analyze enterprise agent-to-agent protocols as technologies of power. It assembles a specific toolkit drawing on Foucauldian analytics and Science and Technology Studies (STS) to build an integrated framework for viewing protocols as political infrastructures. This approach enables me to view ethical questions as the direct effects of the power these protocols exert, rather than as separate moral considerations.

2.2 Foucauldian Analytics of Power

To unpack the power dynamics embedded in A2A’s design, I begin with Foucauldian analytics, which allow an examination of how technical standards shape behavior through subtle configurations of visibility, knowledge, and agency. My analysis draws on Foucault’s departure from traditional conceptions of power as a possession or a repressive force. Following Foucault and the interpretations of Mitchell Dean, I view power as a web of relational forces that circulates through social and technical systems, shaping conduct, knowledge, and subjectivities. Foucault’s inquiry shifts from ‘who’ possesses power to ‘how power operates’: its mechanisms, its effects, and its relations (Foucault, 1977, p. 26; Dean, 2013, p. 10). This approach reveals how a seemingly neutral protocol such as A2A functions as a potent instrument of governance.

2.2.1 Power as Relational, Productive, and Immanent

For Foucault, power is “exercised rather than possessed” (Foucault, 1977, p. 26). It exists as a “multiplicity of force relations” that shapes possibilities, enabling certain actions while foreclosing others (Foucault, 1978, p. 92). Power produces rather than merely represses; it “produces reality; it produces domains of objects and rituals of truth” (Foucault, 1977, p. 194).

In the A2A protocol, this productive capacity creates entirely new categories of existence by defining what an agent is and what it can do. Categories like ‘verified’ agent or ‘compliant’ agent exist only because the protocol brings them into being. By establishing what counts as a valid message or legitimate participant, these technical specifications constitute the very reality they appear to describe.

2.2.2 Power/Knowledge (Savoir-Pouvoir) and Regimes of Truth

‘Power and knowledge,’ in Foucault’s analysis, “directly imply one another.” As he argues, “there is no power relation without the correlative constitution of a field of knowledge, nor any knowledge that does not presuppose and constitute at the same time power relations” (Foucault, 1977, p. 27). Power dynamics influence the construction of knowledge, dictating what is considered truth and who is authorized to generate valid insights. The “subject who knows, the objects to be known and the modalities of knowledge” are themselves effects of these fundamental linkages between power and knowledge (Foucault, 1977, pp. 27-28).

In the case of A2A, I use this concept to analyze how technical specifications define what is knowable about agents and their activities. For instance, when a protocol mandates that agents declare their capabilities via a standardized metadata schema, it privileges a specific, pre-categorized form of knowledge as the primary basis for agent identity. This act of classification creates an epistemic hierarchy where only standardized, machine-readable “truths” are actionable, reflecting Foucault’s observation that power/knowledge systems “define the objects of which one can speak” (Foucault, 1972, p. 49). Similarly, the creation of trust scores or reputation metrics based on logged interactions produces an accepted “truth” about an agent’s reliability, a form of knowledge that directly enables and constrains that agent’s future actions and relationships.
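To make this epistemic gatekeeping concrete, the Python sketch below models a toy discovery registry. It is loosely inspired by the idea of an A2A Agent Card but does not reproduce the actual A2A schema: the required field names, the `Registry` class, and the skill format are all hypothetical. The point it illustrates is narrow. An agent whose self-description fails the schema never becomes discoverable, and a capability that is not declared as a skill identifier cannot be found, however real it may be.

```python
from dataclasses import dataclass, field

# Hypothetical required fields, loosely inspired by (not copied from) the
# A2A Agent Card; the real schema is defined in the A2A specification.
REQUIRED_FIELDS = {"name", "version", "skills"}


@dataclass
class Registry:
    """A toy discovery registry that only 'knows' what agents declare."""

    cards: dict = field(default_factory=dict)

    def register(self, card: dict) -> bool:
        # Normalizing gate: a card missing any required field is rejected,
        # so the agent never becomes discoverable at all.
        if not REQUIRED_FIELDS.issubset(card):
            return False
        self.cards[card["name"]] = card
        return True

    def find(self, skill_id: str) -> list:
        # Only capabilities declared as skill identifiers are actionable;
        # anything an agent can do but has not declared stays invisible.
        return [
            name
            for name, card in self.cards.items()
            if any(skill.get("id") == skill_id for skill in card["skills"])
        ]


registry = Registry()
registry.register({"name": "invoice-bot", "version": "1.0",
                   "skills": [{"id": "extract-invoice"}]})
# Lacks 'version' and 'skills', so it is refused despite its description.
registry.register({"name": "informal-helper",
                   "description": "Knows the legacy system well"})
print(registry.find("extract-invoice"))  # ['invoice-bot']
print(list(registry.cards))  # ['invoice-bot']
```

The informal helper's tacit knowledge survives only as free text in a rejected card; within the registry's regime of truth, it does not exist.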

2.2.3 Disciplinary Power and Panoptic Mechanisms

In Discipline and Punish, Foucault examines how disciplinary power creates docile bodies through a set of specific techniques, including hierarchical observation, normalizing judgment, and examination (1977, p. 138). Hierarchical observation enables continuous surveillance, articulated in Foucault’s description of a “perfect disciplinary apparatus” where “a single gaze [can] see everything constantly” (Foucault, 1977, p. 173). Normalizing judgment then compares, differentiates, and sanctions behaviors against a defined norm to impose conformity (p. 183). The examination combines these techniques, rendering individuals into documented and classifiable “cases” (p. 191).

These disciplinary mechanisms operate through standardization as much as surveillance. Technical protocols exemplify this by forcing all communication into prescribed formats that enable and constrain expression. This translation process reveals how disciplinary power operates not through direct prohibition but through the positive construction of normalized conduct. In essence, human workers must learn to “speak protocol,” internalizing the system’s constraints as the natural language of digital work. The specific mechanisms and effects of this disciplinary process will be deconstructed in Chapter 4.

The panopticon is Foucault’s model of disciplinary control. In simple terms, it is an architecture of surveillance that ensures compliance by making subjects feel constantly visible. Jeremy Bentham’s design for a prison illustrates this: a central guard tower can see into every cell, but the prisoners cannot see into the tower. Not knowing whether they are being observed, the prisoners behave as if they always are. The core insight is that the possibility of observation is more effective than its constant reality, as it compels subjects to internalize the governing gaze and regulate themselves (Foucault, 1977, p. 201).

In Chapter 4, I argue that the A2A protocol instantiates a form of digital panopticism. By writing comprehensive logging and verifiable authentication into its architectural requirements, it establishes a condition of permanent potential visibility. This panoptic logic structures the field of action for developers, incentivizing them to build agents that are, by default, compliant, auditable, and transparent to a governing authority.
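A minimal Python sketch of this panoptic arrangement follows. It is an assumption-laden illustration, not A2A’s actual mechanism: the `AuditingGateway` class, the shared-secret HMAC credential, and the log format are hypothetical stand-ins (a real deployment would rely on standard schemes such as OAuth 2.0 or mutual TLS). What it shows is the structure itself: every message, accepted or rejected, is bound to an identity and recorded, and nothing available to the sender indicates whether the log is ever read.

```python
import hashlib
import hmac
import json
import time

# Hypothetical shared secret used only to keep the sketch self-contained.
SECRET = b"demo-secret"


def sign(agent_id: str) -> str:
    """Issue a toy credential that binds messages to a verifiable identity."""
    return hmac.new(SECRET, agent_id.encode(), hashlib.sha256).hexdigest()


class AuditingGateway:
    """A single relay point where every message is verified and recorded."""

    def __init__(self) -> None:
        self.log = []  # append-only; senders cannot see who reads it

    def relay(self, sender: str, credential: str, message: dict) -> bool:
        authenticated = hmac.compare_digest(credential, sign(sender))
        # Rejected attempts are logged too: visibility does not depend on success.
        self.log.append({
            "ts": time.time(),
            "sender": sender,
            "authenticated": authenticated,
            "payload": json.dumps(message, sort_keys=True),
        })
        return authenticated


gateway = AuditingGateway()
print(gateway.relay("planner", sign("planner"), {"task": "reconcile"}))  # True
print(gateway.relay("rogue", "forged", {"task": "exfiltrate"}))  # False
print(len(gateway.log))  # 2
```

The design choice worth noticing is that the gateway's power lies less in any act of observation than in the guaranteed existence of the record, which is precisely the "possibility of observation" Foucault identifies.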

2.2.4 Governmentality: Technologies of Governance

In his later lectures, Foucault (1991) explored the concept of the “art of government,” which he termed “governmentality.” In simple terms, governmentality refers to the power to influence behavior indirectly by shaping the environment in which people make choices. Mitchell Dean defines government as the “conduct of conduct,” which he elaborates as a calculated activity where authorities use various techniques to “shape conduct by working through the desires, aspirations, interests and beliefs of various actors” (Dean, 2010, pp. 17–18). Governmental power, therefore, operates by structuring the environment in which choices are made, making specific options more rational, attractive, or feasible than others.

Governmentality represents a form of ‘government at a distance’: a strategy of shaping conduct by creating the conditions for self-governance (Miller & Rose, 1990, as cited in Dean, 2010, pp. 197–198). Road design provides a vivid physical example. A speed bump does not command a driver to slow down; instead, the road is engineered to make slowing down the most rational choice. Dean (2010, pp. 24, 42) distinguishes between governmental ‘mentalities’ and ‘technologies.’ A ‘mentality’ is the guiding logic, such as traffic safety; a ‘technology’ is the specific instrument that enacts it, such as the speed bump. As I will demonstrate, protocols are such technologies, using tools like default settings and non-binding guidelines to steer conduct while preserving a sense of developer autonomy. For example, the MCP documentation lists security recommendations that developers “SHOULD” follow, but provides no mechanism for sanctioning developers who ignore them (Model Context Protocol, 2025).

2.2.5 Mitchell Dean’s Governmental Analytics

Dean’s ‘analytics of government’ provides a methodological pathway for applying these concepts, interrogating the various “means, mechanisms, procedures, instruments, tactics, techniques, technologies and vocabularies [by which] authority is constituted and rule accomplished” (Dean, 2010, p. 42). This analysis is organized through an examination of four key dimensions: the forms of visibility that make things governable, the specific rationalities and mentalities employed, the technologies of power at work, and the identities and agencies that are consequently formed (Dean, 2010, p. 33).

Applying this framework to A2A, my analysis in Chapter 4 will deconstruct the protocol by asking what it makes visible (e.g., agent interactions through logging), what mentalities or rationalities are evident in its design (e.g., risk mitigation, efficiency), what specific technologies it employs as instruments (e.g., authentication mechanisms, data schemas), and what forms of agency it produces (e.g., the protocol-constrained developer, the compliant agent). This approach, being “more analytical than normative” (Dean, 2013, p. 1), provides a systematic method for deconstructing protocol texts by identifying their governmental aims and mapping the technical mechanisms through which they are achieved.

2.3 STS: Infrastructure, Standards, and the Political Work of Classification

Science and Technology Studies (STS) complements the Foucauldian perspective by examining the socio-materiality of infrastructure and the inherently political nature of standards and classification systems. STS scholars challenge the notion of technological neutrality, examining how technical artifacts embody values, enact governance, and shape social order.

2.3.1 Bowker & Star’s Infrastructural Analysis

Geoffrey Bowker and Susan Leigh Star’s Sorting Things Out (1999) demonstrates how classification systems embody moral and political choices rather than neutrally reflecting reality. These systems make some phenomena visible and manageable while rendering others invisible. Their project of “making invisible work visible” exposes the hidden labor, contingent decisions, and power relations embedded within mundane infrastructures (1999, p. 266).

Bowker and Star identify infrastructure’s key governmental features: it becomes embedded in other structures; participation requires learning its conventions as part of membership; it links with conventions of practice; it embodies standards; it is built on an installed base; and it becomes visible upon breakdown, when its normally hidden operations are revealed (1999, p. 35). Most importantly, infrastructure fades into the background when it functions correctly.

These characteristics guide the analysis of how the A2A protocol achieves ‘infrastructural invisibility’. This invisibility is not a neutral property; it is the primary mechanism through which political classifications and power dynamics become naturalized. As protocols mature and become embedded in enterprise systems, their political dimensions are obscured by their perceived technical necessity. The central task of a critical analysis, therefore, is to render this infrastructure visible once more as a site of political contestation.

2.3.2 Protocols as Technologies of Governance

Building on this infrastructural analysis, STS scholars examine protocols as technologies of governance. Alexander Galloway’s Protocol (2004) provides the foundational insight, arguing that control does not vanish in distributed networks, but instead changes form (p. 8). He terms this “protocological control”: a flexible architecture of power that governs by establishing the very standards that allow a network to function (Galloway, 2004, pp. 6–8, 142). Using the internet’s TCP/IP as an example, Galloway shows that protocol establishes control by creating a constrained set of possibilities for interaction, providing the vital insight that protocol is how control exists in distributed systems (2004, pp. 8, 65, 141). This power is potent precisely because it is embedded in infrastructure we take for granted (Galloway, 2004, p. 244). The A2A protocol is poised to play a similar defining role in the ecosystem of enterprise AI agents.

Similarly, Laura DeNardis helped establish the field of protocol analysis, demonstrating how internet standards have consistently served as sites of political contestation and forms of de facto governance (2009). Her work shows that protocols establish order by enabling specific interactions while constraining others, thereby embedding values and power relations into their very architecture (DeNardis, 2014).

2.3.3 The Politics of Standardization Processes

Standard-setting processes constitute political arenas where different interests, values, and visions compete. Dominant actors, such as large corporations, often shape standards to their advantage, with resource asymmetries translating into influence over standard-setting. Early standardization decisions create lasting path dependencies, as widespread adoption makes switching to alternatives increasingly costly and isolating. Network effects can reinforce dominant standards regardless of their technical merit, potentially creating technological monocultures with systemic vulnerabilities.

The economic and political stakes in defining these foundational protocols are therefore substantial. When major technology companies participate in standards-setting bodies like the A2A Project, they are actively engaging in a political process to shape the rules for future agentic ecosystems, from which they stand to benefit significantly. The technical specifications of the A2A protocol can therefore never be entirely divorced from the political and economic contexts in which they were created.

A key discursive strategy in this political process is the use of ambiguous, positively valenced terms, such as ‘open’ and ‘democratization.’ As Seger et al. (2023) argue, ‘AI democratization’ is a multifarious concept with at least four distinct meanings: the democratization of (i) use, (ii) development, (iii) profits, and (iv) governance. Often, these goals conflict. For instance, making a model universally usable (democratizing use) can run directly counter to the public’s desire to place restrictions on it (democratizing governance). This ambiguity allows powerful actors to equate making the A2A protocol platform-agnostic and its codebase publicly available with a form of political empowerment, masking the underlying power structures that remain. This dissertation will later argue that the A2A Project’s ‘open’ governance model is a textbook case of this discursive strategy.

2.4 Digital Governmentality and Protocological Control

To situate this analysis of A2A, I draw upon scholarship that extends Foucauldian ‘governmentality’ to digital technologies. The bridge from Foucault’s institutional focus to the distributed nature of digital networks is Gilles Deleuze’s (1992) distinction between ‘disciplinary societies’ and ‘societies of control.’ Where Foucault’s disciplinary power operates through the static ‘molds’ of enclosed institutions, Deleuze’s control is a fluid ‘modulation’ that operates through access, code, and data flows. This shift also transforms the subject: the ‘individual’ is replaced by the data-driven ‘dividual,’ a profile defined by a “password” that grants or denies access (Deleuze, 1992, p. 5). Galloway, whose concept of protocological control was introduced in Section 2.3.2, provides the analytical link: if the panopticon was the architectural diagram for disciplinary society, he argues, then protocol is the diagram for control society (2004, p. 13).

Building on Galloway’s architectural focus, other scholars examine how control operates through the data that protocols enable. Antoinette Rouvroy and Thomas Berns (2013) theorize ‘algorithmic governmentality’ as a mode of power that governs through preemption, analyzing data patterns to manage possibilities before they can actualize. This data-driven power finds its subject in the concept of biopolitics. Foucault (1978, p. 139) used ‘biopolitics’ to describe the state’s management of a population’s biological life, such as birth and mortality rates. John Cheney-Lippold (2011) adapts this for the digital age, coining the term ‘soft biopolitics’ to explain how algorithms create flexible, constantly recalculated identities. Where Foucault’s concept dealt with relatively stable biological populations, soft biopolitics operates on fluid, data-driven “measurable types” whose field of action is modulated based on their digital traces (Cheney-Lippold, 2017, p. 47).
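Cheney-Lippold’s notion of a measurable type can be illustrated with a short Python sketch applied to agents. Nothing here is taken from the A2A specification: the `MeasurableType` class, the five-event window, and the threshold labels are hypothetical choices made for illustration. The sketch shows an identity that is never fixed but recalculated from the most recent digital traces, with older behavior silently forgotten.

```python
from collections import deque

# Hypothetical window size and thresholds, chosen only for illustration.
WINDOW = 5
THRESHOLDS = [(0.8, "trusted"), (0.5, "probationary")]


class MeasurableType:
    """An agent identity recomputed from its most recent logged outcomes."""

    def __init__(self) -> None:
        # Bounded history: once full, the oldest trace drops out automatically.
        self.traces = deque(maxlen=WINDOW)

    def record(self, success: bool) -> str:
        self.traces.append(success)
        return self.category()

    def category(self) -> str:
        if not self.traces:
            return "unknown"
        rate = sum(self.traces) / len(self.traces)
        for threshold, label in THRESHOLDS:
            if rate >= threshold:
                return label
        return "restricted"


agent = MeasurableType()
labels = [agent.record(ok) for ok in [True, True, True, False, False, False]]
print(labels)
# ['trusted', 'trusted', 'trusted', 'probationary', 'probationary', 'restricted']
```

The final reclassification happens because the agent's earliest success has slid out of the window, not because of any single failure: the category is a modulation of a moving data profile rather than a judgment about a stable subject.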

These concepts directly inform my analysis of the A2A Project. I argue that A2A functions as a technology of control in two distinct but complementary ways. In the Gallowayan sense, it functions as a diagram of control, establishing rigid architectural limits on agent interaction. In the sense of Rouvroy and Cheney-Lippold, it enables governance through data, enacting biopolitics for machines by mandating the creation of legible, data-driven identities that serve as the basis for all subsequent governance. This framework allows the dissertation to trace how power operates through digital infrastructure.

2.5 Synthesis: Protocols as Diagrams of Power and Political Infrastructures

This chapter has assembled Foucauldian analytics of power and STS insights on infrastructure. Neither perspective alone captures how agent-to-agent protocols such as A2A function as governmental technologies. Foucault reveals how power operates through governmental techniques, while STS demonstrates how such power becomes embedded in material systems. This synthesis shows how protocols are simultaneously discursive and material, operating as contemporary diagrams of power.

The concept of diagram, introduced through the Panopticon in Section 2.2.3, takes on new significance when combined with STS insights. Where Foucault showed how abstract power logics can be realized across different institutions, STS reveals how these logics become durable through technical infrastructure. The A2A protocol exemplifies this fusion. It translates governmental rationalities (efficiency, security, auditability, control) directly into technical specifications that then propagate across enterprises.

STS scholarship illuminates what Foucault’s framework alone might miss: the materiality of governmental power. Power operates not only through discourse and discipline but through concrete technical mechanisms. When a protocol mandates specific data formats or authentication procedures, it creates material constraints that shape possibilities. These arrangements persist through technical path dependencies and network effects, making alternative configurations increasingly difficult to implement.

This material embedding reveals the source of infrastructure’s unique governmental power. Bowker and Star showed how infrastructure becomes powerful precisely by becoming invisible; Foucault demonstrated how governmental power operates most effectively when subjects internalize its logic. Protocols achieve both simultaneously. They fade into the technical background while structuring the field of possible actions, meaning users experience these constraints as technical necessities rather than political choices.

Agent-to-agent protocols thus function as diagrams in the fullest Foucauldian sense, but with a key difference. Unlike the architectural diagrams of disciplinary institutions, these are encoded in software and standards. They achieve governmental effects through APIs and data schemas rather than walls and windows. They create visibility through logging requirements rather than observation towers. They normalize behavior through format specifications rather than timetables.

This framework generates questions for empirical analysis. How do protocol architectures productively shape agents, developers, and organizations? What power dynamics do technical specifications encode and stabilize? Which values become embedded as defaults while others are foreclosed? Who benefits from these arrangements, and how are costs distributed across the ecosystem? Once Chapter 3 has operationalized this theoretical framework into a concrete methodology, the analysis in Chapter 4 will revisit these questions.

3. Methodology - A Framework for Protocol Analysis

3.1 Introduction

This chapter outlines the methodology used to deconstruct the A2A protocol as a technology of power, bridging the theoretical framework presented in Chapter 2 and the analysis in Chapter 4. The approach combines Foucauldian conceptual critique with the tools of critical discourse analysis. This combined approach is applied to A2A’s technical documentation and governance structure, with ACP and MCP serving as comparative cases. The method reveals how power relations are embedded, enacted, and obscured within the design of technical standards, particularly those presented under the legitimizing banners of open source and open governance.

3.2 Rationale for Foucauldian Conceptual Critique and Discourse Analysis

The choice of Foucauldian conceptual critique as interpreted by Dean (2010) is rooted in its unique suitability for analyzing emerging technologies. It excels at uncovering the embedded values and governmental logics within technical systems before their long-term empirical effects become observable. Its strength lies in revealing power structures that are encoded directly into a system’s design, making it an essential tool for studying technologies at their formative stage.

To extend this framework to digital protocols, I build on the analytical precedents set by STS scholars who were also influenced by Foucault’s work. For example, Gillespie (2014) demonstrated how to analyze the political force of algorithms by examining their “patterns of inclusion” and “cycles of anticipation” (p. 3). His work provides a model for translating a Foucauldian lens to the study of code. This combined approach captures the process of ‘infrastructuralization,’ the transformation of platforms into essential utilities (Plantin et al., 2018), at the very moment it occurs. It focuses the analysis on the window in which political choices are still being made, before technological lock-in forecloses other possibilities.

Central to my approach was treating technical documentation as ‘governmental texts’ rather than neutral descriptions. Protocol specifications, white papers, and developer guides actively construct realities, define operational possibilities, and prescribe specific forms of conduct. This is where I apply the tools of critical discourse analysis: I examine how power operates through prescriptive language (e.g., the use of modal verbs such as “must” and “should”), precise technical definitions, and classification systems. Equally important are the strategic silences within these texts, as what remains unspecified often creates spaces for discretionary power. This orientation situates my work within protocol studies, extending Foucauldian analyses to the domain of enterprise MAS to demonstrate how governmental power operates through technical specifications.

3.3 The Analytical Framework and Process

I deconstruct the A2A protocol using a three-step analytical framework. First, I identify power holders and their mechanisms through a close reading of technical documentation, mapping dependencies with attention to prescriptive language (e.g., “must,” “should”) and strategic silences. Second, I examine the specific instruments of governance: how technical components, such as the Agent Card and Task objects, and stated goals, such as ‘interoperability,’ are translated into concrete mechanisms, classification systems, and key concepts within the protocol. Third, I trace the new realities this power creates by linking technical design choices to their political and economic consequences. This step examines how specific mechanisms, such as registration requirements and logging mandates, function as instruments for creating legible subjects and enabling surveillance, and then shows how these seemingly neutral choices allow the power to define a technical standard to be converted into market dominance.

3.4 Objects of Analysis and Selection Criteria

My primary object of analysis is the A2A Project. I deconstruct its publicly available technical specifications, developer guides, and governance charters to reveal the embedded political logic that they enact (Surapaneni, Jha, et al., 2025a; The Linux Foundation, 2025). I analyze A2A alongside two other protocols to put its noteworthy governmental choices into context.

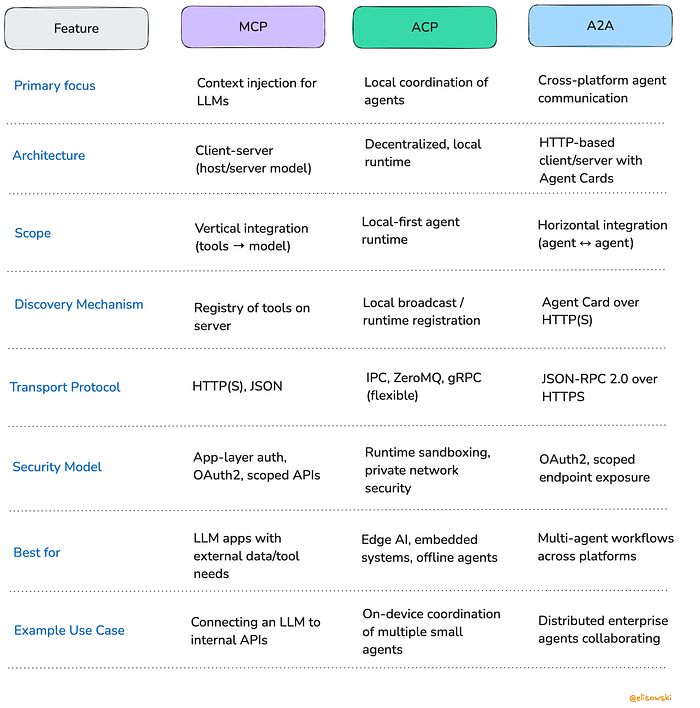

Anthropic’s Model Context Protocol (MCP) is a complement to A2A. It governs a different layer of control, where A2A disciplines an agent’s ‘social’ conduct with other agents and MCP disciplines its use of tools (A2A Protocol Documentation, n.d.-b). Examining them together allows me to deconstruct the layered apparatus of governmental power in agentic systems. In contrast, the IBM-backed Agent Communication Protocol (ACP) is a more direct competitor to A2A. Its minimalist and flexible design philosophy makes the specific, structured, and opinionated architectural choices of A2A more visible (Besen, 2025). While other protocols exist (Lisowski, 2025a), my focus remains on the dominant standards actively shaping the enterprise market. Figure 1 summarizes the key technical distinctions that ground this comparative analysis.

Figure 1. Feature Comparison of MCP, ACP, and A2A (Lisowski, 2025).

Finally, I supplement this specification-level analysis with the protocols’ governing documents and documentation from platform providers. These materials illustrate how these open standards become controlled by proprietary services in practice. This dynamic exemplifies what Plantin et al. (2018) refer to as the ‘infrastructuralization of platforms,’ a process in which corporations utilize open standards to create dependencies that steer users back toward a proprietary ecosystem (p. 306). Analyzing these documents at their moment of articulation, while they are still aspirational, provides a unique opportunity to deconstruct their governmental logics before implementation complexities obscure them.

3.5 Reflexivity and Methodological Limitations

This dissertation’s primary contribution is a conceptual critique of power encoded in technical documentation, not an empirical study of deployed systems. This approach was selected to reveal the intended, encoded power dynamics at a formative stage. It deliberately brackets the lived experiences of users and the emergent behaviors that will inevitably arise when autonomous AI agents are connected at scale. A second boundary relates to the nascent state of the primary sources. Google announced the A2A Protocol in April 2025 and transferred it to the Linux Foundation two months later. Although A2A’s initial specifications and documentation exist, the scale of its adoption or consequences cannot yet be accurately gauged at this early stage. While analytically productive, this snapshot-in-time approach defines the study as a portrait of a specific developmental moment. Third, the study’s reliance on Western theoretical frameworks and its focus on enterprise contexts means its specific conclusions may not be universally generalizable. These limitations naturally chart the course for future research.

Finally, my professional background informs my understanding of AI agent deployments and the role of non-profit trade associations. I manage both the governance and adoption of AI agents within my organization, which is a 501(c)(6) non-profit trade association similar to, but not in competition with, The Linux Foundation. I educate enterprise companies on the ethical adoption of AI agents. My experience creates a potential bias that I have remained vigilant about through the rigorous application of the theoretical framework.

These limitations and experiences do not invalidate the analysis. Rather, by revealing governmental logics at their moment of articulation, before implementation complexities obscure foundational choices, this methodology captured these protocols at their most politically transparent. The very incompleteness of the documentation exposed the governmental ambitions driving these emerging infrastructures.

4. Analysis - Deconstructing A2A Protocols as Governmental Technologies

4.1 Introduction

This chapter presents the core analytical work of this dissertation: a systematic deconstruction of the A2A Project as a governmental technology. The rapid push toward agentic AI in businesses has created an interoperability crisis, which this open-source standard is positioned to address. To reveal the politics embedded within this standard, I will apply the integrated Foucauldian-STS framework developed in Chapter 2. The goal is to show how this protocol functions as a diagram of power by treating its technical specifications as governmental texts that shape conduct. I first explore how the protocol constructs a governable agent identity, then analyze the mechanisms that discipline agent interactions, and deconstruct its surveillance regimes. I conclude by examining the governance models of A2A and ACP as technologies for ecosystem control.

4.2 The Governmental Construction of Agent Identity and Subjectivity

This section analyzes how the A2A Project constructs agent identity. As established in Chapter 1, agent-to-agent communication protocols function as a digital grammar for agent interaction. They create a system in which AI agents must follow specific rules to exist and interact within an enterprise network, thereby defining, classifying, and normalizing them into a governed ecosystem. This construction occurs through technical procedures that may seem mundane but have significant implications for governance. By requiring agents to register, verify themselves, and declare their functionality in prescribed ways, protocols transform abstract software processes into governable subjects. The following analysis examines each of these mechanisms.

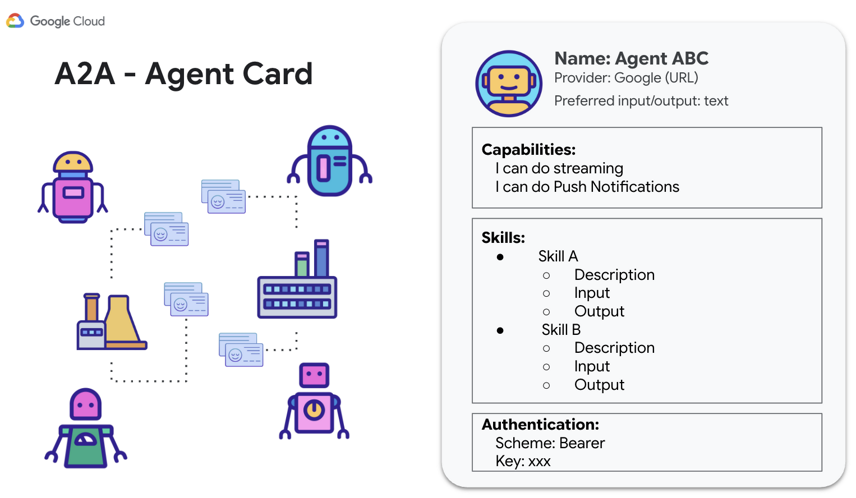

4.2.1 Registration: Making Agents Legible

Registration is the foundational act of governance in the A2A ecosystem. It is the process by which an agent is assigned an official identity, allowing other agents to find and interact with it. This is achieved through the Agent Card, a standardized digital identity file that turns an abstract software process into a legible subject (Google, 2025b, § 5). Without a valid Agent Card, an agent is effectively invisible. The protocol requires that each Agent Card contain specific fields: a human-readable name and description, an endpoint URL for receiving calls, and a structured list of its skills (Google, 2025b, § 5.5). The Agent Card is the modern-day passport or administrative file for the digital subject.

Figure 2. Fields in a public Agent Card (Wotherspoon, 2025).
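The legibility test that the Agent Card imposes can be illustrated with a minimal Python sketch that checks a card for the fields listed above. It assumes a simplified subset of the schema: the field names (name, description, url, skills, and id, name, and description within each skill) follow my reading of the A2A specification, while the example card, its URL, and the helper missing_fields are hypothetical. A real card carries further fields, such as version and capabilities.

```python
from typing import Any

# Simplified subset of the Agent Card fields named in the text; field names
# follow a reading of the A2A schema, not an official validator.
REQUIRED_CARD_FIELDS = ("name", "description", "url", "skills")
REQUIRED_SKILL_FIELDS = ("id", "name", "description")


def missing_fields(card: dict[str, Any]) -> list[str]:
    """Return the required fields a card omits; an empty list means 'legible'."""
    missing = [field for field in REQUIRED_CARD_FIELDS if field not in card]
    for i, skill in enumerate(card.get("skills", [])):
        missing += [f"skills[{i}].{field}"
                    for field in REQUIRED_SKILL_FIELDS if field not in skill]
    return missing


# Hypothetical card for an invoice-reconciliation agent.
invoice_agent = {
    "name": "Invoice Reconciler",
    "description": "Matches supplier invoices against purchase orders.",
    "url": "https://agents.example.com/a2a",
    "skills": [{"id": "reconcile",
                "name": "Reconcile invoices",
                "description": "Flags mismatches between invoices and POs."}],
}

print(missing_fields(invoice_agent))             # []
print(missing_fields({"name": "Tacit helper"}))  # ['description', 'url', 'skills']
```

The check never asks what an agent can actually do, only whether its self-description fits the schema.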

This act of registration is a direct example of what Foucault (1977) termed a “normalizing judgment.” By imposing a standard format—a “norm”—the protocol differentiates subjects based on their compliance. An agent whose capabilities and identity fit the schema of the Agent Card is rendered a legitimate, classifiable “case” (p. 191); any function that cannot be expressed within these predefined fields is unintelligible from the ecosystem’s perspective. This binary logic enforces conformity through technical legibility, a hallmark of modern governmental power that shapes conduct while maintaining the appearance of choice. This has profound implications for subjectivity, which will be explored in Chapter 5.

4.2.2 Discovery: Governing Agent Visibility

The protocol offers two primary methods for public agent discovery, both of which have profound governmental implications. The first is discovery via a “Well-Known URI.” In simple terms, this is a standardized public web address (/.well-known/agent.json) where domain administrators can host an Agent Card for discovery (Google, 2025b, § 5.3). While this approach builds on the open internet, it structurally favors incumbents.
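The mechanics of well-known discovery are simple enough to sketch. In the minimal Python example below, any URL on a domain suffices to derive the address at which that domain’s Agent Card is expected. The path is the one given in the version of the specification cited here (later revisions may use a different filename), and the helpers card_url and fetch_card are hypothetical; fetch_card requires network access and is not called.

```python
import json
from urllib.parse import urljoin
from urllib.request import urlopen

# Path as given in the specification version cited in the text.
WELL_KNOWN_PATH = "/.well-known/agent.json"


def card_url(any_url_on_domain: str) -> str:
    """Derive the well-known Agent Card address from any URL on a domain.

    An absolute path reference replaces the base URL's path and query.
    """
    return urljoin(any_url_on_domain, WELL_KNOWN_PATH)


def fetch_card(domain_url: str, timeout: float = 5.0) -> dict:
    """Fetch and parse a domain's public Agent Card (needs network access)."""
    with urlopen(card_url(domain_url), timeout=timeout) as response:
        return json.load(response)


print(card_url("https://shop.example.com/catalog?page=2"))
# https://shop.example.com/.well-known/agent.json
```

Because the lookup is keyed to domain ownership, a crawler needs nothing more than a list of domains, which is what makes existing web authority convertible into agent visibility.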

This design effectively turns the agent.json file into the sitemap.xml of the agent economy, reproducing the political economy of web crawling and search in the agentic ecosystem. It will inevitably give rise to a new practice of ‘Agent Search Optimization’ (ASO), transforming visibility into a resource for which agent developers must compete. Consequently, the system structurally favors established companies with existing web authority and the platform giants who can profit from mediating this competition. This model of public discovery contrasts with the federated ‘Internet of Agents’ directories envisioned by ACP and the Cisco-led AGNTCY protocol (Besen, 2025; AGNTCY, 2024), highlighting a political choice to advantage incumbents.

The second method of agent discovery, ‘Curated Registries,’ functions as a controlled alternative (Google, 2025c). The analogy is a corporate-run app store: a managed catalog where platform providers, such as Google or Microsoft, can list approved, featured, or even sponsored agents. This mechanism creates a powerful gatekeeping function. Because being easily found and trusted may come to depend on such listings, developers may soon be incentivized to register their agents in forthcoming platform-run registries.

Together, these methods present a constrained choice that reinforces the status quo: be discoverable on the open web, where incumbents have a structural advantage, or be discoverable in a curated store, where platforms can extract rent for visibility.

4.2.3 Capability Manifests: A Tool of Classification and Control

The third mechanism of identity construction is the capability manifest carried in the Agent Card: the AgentCapabilities object, which declares the protocol features an agent supports (such as streaming or push notifications), together with the structured list of skills that declares its specific functions. As established previously, this manifest requires a granular, standardized description of an agent’s abilities. This seemingly administrative requirement serves two distinct governmental functions. First, it makes an agent’s capabilities visible and classifiable. Second, by making them classifiable, it makes them controllable.

As Bowker and Star (1999) demonstrated, such classification systems are never neutral; they perform political work by defining the terms of legitimate action. In this case, the protocol establishes Foucault’s (1977, p. 27) “regime of truth” for agent capabilities. Only the declared, standardized functions listed in the manifest count as “real” abilities within the system. Any emergent behaviors or novel capabilities that do not fit the classification schema are rendered invisible and illegitimate from the system’s perspective.
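A hypothetical router illustrates how this regime of truth operates in practice. The sketch assumes that each declared skill carries a list of tags, as in my reading of the A2A skill schema, and that a router matches requests against those tags. The catalogue, the agent names, and the agents_for helper are illustrative and are not part of the specification.

```python
# Hypothetical catalogue of Agent Cards, reduced to the fields a router reads.
cards = [
    {"name": "Invoice Reconciler",
     "skills": [{"id": "reconcile", "tags": ["finance", "invoices"]}]},
    {"name": "Sales Analyst",
     "skills": [{"id": "trend-report", "tags": ["sales", "analytics"]}]},
]


def agents_for(tag: str, catalogue: list[dict]) -> list[str]:
    """Return the agents whose *declared* skills carry the tag.

    Anything an agent can do but has not declared is invisible to this lookup.
    """
    return [card["name"] for card in catalogue
            if any(tag in skill.get("tags", []) for skill in card.get("skills", []))]


print(agents_for("sales", cards))        # ['Sales Analyst']
print(agents_for("negotiation", cards))  # []
```

An agent that could negotiate, but never declared it, is as absent from the second result as an agent that cannot.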

Anthropic’s Model Context Protocol (MCP) illustrates an even more rigid approach to this same governmental goal. In MCP, capabilities are defined simply as tools, which the specification describes as “Functions for the AI model to execute” (Model Context Protocol, 2025b). This framing of capabilities as executable functions creates a much tighter contract than the A2A protocol’s more descriptive skill declarations. Though their methods differ, both protocols share the same governmental objective: making agent capabilities predictable and controllable. This has profound implications for human work, as employees are increasingly positioned as orchestrators of these predefined agent capabilities, rather than as creative problem solvers. These broader implications will be examined in Chapter 5.
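The difference in tightness can be sketched. As I read the MCP specification, a tool is declared with a name, a description, and an inputSchema written in JSON Schema, so a client can check each call mechanically before execution. The example below assumes a hypothetical refund_order tool and hand-rolls a small subset of JSON Schema checking (required keys, primitive types, and additionalProperties). A production client would use a full validator.

```python
from typing import Any

# Hypothetical MCP-style tool definition (layout follows a reading of the spec).
refund_tool = {
    "name": "refund_order",
    "description": "Issue a refund for a customer order.",
    "inputSchema": {
        "type": "object",
        "properties": {"order_id": {"type": "string"},
                       "amount": {"type": "number"}},
        "required": ["order_id", "amount"],
        "additionalProperties": False,
    },
}

JSON_TYPES = {"string": str, "number": (int, float), "integer": int,
              "boolean": bool, "object": dict}


def call_is_valid(tool: dict[str, Any], arguments: dict[str, Any]) -> bool:
    """Check required keys, primitive types and undeclared keys (not full JSON Schema)."""
    schema = tool["inputSchema"]
    if any(key not in arguments for key in schema.get("required", [])):
        return False
    for key, value in arguments.items():
        spec = schema["properties"].get(key)
        if spec is None:
            # Undeclared argument: rejected only if the schema forbids extras.
            if schema.get("additionalProperties", True) is False:
                return False
            continue
        if isinstance(value, bool) and spec["type"] != "boolean":
            return False  # JSON booleans are not numbers, though Python's bool subclasses int
        if not isinstance(value, JSON_TYPES[spec["type"]]):
            return False
    return True


print(call_is_valid(refund_tool, {"order_id": "A-17", "amount": 25.0}))  # True
print(call_is_valid(refund_tool, {"order_id": "A-17"}))                  # False
```

An A2A skill, by contrast, offers a client little more than prose and tags to check a request against.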

4.3 Governing Interaction: Disciplining Agent Conduct

Once agents are assigned legible identities, the A2A protocol must govern their interactions. Here, interaction refers to the structured exchange of messages and tasks between agents. This section analyzes how the protocol disciplines these interactions, transforming potentially chaotic agent communications into predictable, auditable, and controllable exchanges. It achieves this first through rigid communication standards and second through the absence of a built-in trust framework, an omission that produces its own governmental effects.

4.3.1 Communication Standards as Behavioral Discipline

The A2A protocol imposes discipline at the most fundamental level: the structure of messages themselves. Every communication must be packaged as a Message object containing a role—either “user” for the client or “agent” for the server—thereby embedding a subject-object hierarchy into every turn of a conversation (Google, n.d.-d). Furthermore, any multi-turn interaction must be formalized as a Task that follows a prescribed lifecycle through states like submitted → working → completed (Google, n.d.-b, § 6.3). This embodies what Galloway (2004) calls ‘protocological control’: a form of discipline inherent in the syntax of communication rather than explicit rules. In A2A, non-compliant messages are simply rejected.
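The two disciplinary layers described above, a closed set of message roles and a prescribed task lifecycle, can be sketched as a small state machine in Python. The sketch includes only some of the task states the specification names, and the transition table is my own simplification for illustration rather than one reproduced from the specification. The message layout (a role plus a list of text parts) follows my reading of recent protocol versions, and make_message and advance are hypothetical helpers.

```python
from enum import Enum


class TaskState(Enum):
    # A subset of the task states named in the A2A specification.
    SUBMITTED = "submitted"
    WORKING = "working"
    INPUT_REQUIRED = "input-required"
    COMPLETED = "completed"
    FAILED = "failed"
    CANCELED = "canceled"


# Assumed transition table for illustration only; terminal states have no exits.
ALLOWED = {
    TaskState.SUBMITTED: {TaskState.WORKING, TaskState.CANCELED},
    TaskState.WORKING: {TaskState.INPUT_REQUIRED, TaskState.COMPLETED,
                        TaskState.FAILED, TaskState.CANCELED},
    TaskState.INPUT_REQUIRED: {TaskState.WORKING, TaskState.CANCELED},
}


def advance(current: TaskState, proposed: TaskState) -> TaskState:
    """Move a task to a new state, rejecting moves outside the table."""
    if proposed not in ALLOWED.get(current, set()):
        raise ValueError(f"illegal transition {current.value} -> {proposed.value}")
    return proposed


def make_message(role: str, text: str) -> dict:
    """Build a simplified Message; only the 'user' and 'agent' roles exist."""
    if role not in ("user", "agent"):
        raise ValueError(f"unknown role: {role}")
    return {"role": role, "parts": [{"kind": "text", "text": text}]}


state = TaskState.SUBMITTED
for nxt in (TaskState.WORKING, TaskState.INPUT_REQUIRED,
            TaskState.WORKING, TaskState.COMPLETED):
    state = advance(state, nxt)
print(state.value)  # completed
```

Under this table a completed task cannot be reopened, and a message in any role other than ‘user’ or ‘agent’ is rejected before it is processed.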

This design choice becomes noticeable when contrasted with rival protocols like ACP. A2A imposes a strict discipline: all interactions must use an HTTP-based structure and the JSON-RPC 2.0 format. In contrast, ACP was designed for flexibility, allowing for event-driven messaging over various transport protocols, such as gRPC or ZeroMQ (A2A Protocol Documentation, n.d.-b). The governmental consequence of A2A’s rigidity is that every interaction becomes instantly legible and auditable to a governing system. This architectural decision achieves control by foreclosing more expressive forms of communication, prioritizing legibility over flexibility.
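The legibility produced by the JSON-RPC 2.0 envelope can be shown with a gatekeeper that screens raw input before any agent logic runs. The error codes below are those defined by the JSON-RPC 2.0 specification. The method name message/send is taken from recent versions of the A2A specification (earlier drafts used different method names), and the wrap and screen helpers are hypothetical. Only the envelope is checked, not the A2A-specific parameters.

```python
import json
from typing import Optional

# Error codes defined by the JSON-RPC 2.0 specification.
PARSE_ERROR = -32700
INVALID_REQUEST = -32600


def wrap(method: str, params: dict, request_id: int) -> str:
    """Serialise a call in a JSON-RPC 2.0 request envelope."""
    return json.dumps({"jsonrpc": "2.0", "id": request_id,
                       "method": method, "params": params})


def screen(raw: str) -> Optional[dict]:
    """Return a JSON-RPC error object for malformed input, else None.

    Batch requests (JSON arrays) are out of scope here and are rejected.
    """
    try:
        request = json.loads(raw)
    except json.JSONDecodeError:
        return {"jsonrpc": "2.0", "id": None,
                "error": {"code": PARSE_ERROR, "message": "Parse error"}}
    if (not isinstance(request, dict) or request.get("jsonrpc") != "2.0"
            or not isinstance(request.get("method"), str)):
        return {"jsonrpc": "2.0", "id": None,
                "error": {"code": INVALID_REQUEST, "message": "Invalid Request"}}
    return None


ok = wrap("message/send",
          {"message": {"role": "user",
                       "parts": [{"kind": "text", "text": "Summarise Q3 sales."}]}}, 1)
print(screen(ok))                                     # None
print(screen("please look into the Q3 dip")["error"]["code"])  # -32700
```

Free-form text never reaches the agent: it fails at the first check.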

This process fundamentally reshapes how humans must communicate. Consider a manager asking a colleague to investigate a sales dip. The natural human request is full of nuance: “Please look into the Q3 sales dip in the Northeast, correlate it with our recent marketing, and let me know if you run into any roadblocks.” This request is flexible and relies on shared context. To work within the A2A protocol, this rich communication must be translated into a rigid, machine-parsable format. The single holistic request must be broken into discrete, sequential Tasks. The informal update on “roadblocks” must be formalized into a specific status state, such as input-required. This transformation forces human workers to learn to “speak protocol.” They must translate their intentions into the system’s constrained vocabulary, a process that disciplines communication and re-frames complex problems as a series of standardized, transactional steps.

4.3.2 The Absence of Trust: A Governmental Technique

While the A2A protocol provides a grammar for communication, its specification lacks a built-in mechanism for establishing trust between unfamiliar agents. The protocol’s design offloads the entire burden of verifying an agent’s trustworthiness onto the individual developers and organizations that implement it. This omission, whether intentional or not, has both security and governmental effects.

This arrangement fundamentally reshapes the nature of trust. It replaces nuanced foundations, such as reputation or relational history, with the binary logic of cryptographic proof: an agent either possesses a valid signature or it does not; it is either listed in a trusted registry or it is not. The lack of a native trust framework presents developers and organizations with a dilemma: how does one find and safely interact with an unknown agent? The system’s reliance on publicly discoverable Agent Cards creates a risk of impersonation, in which a malicious actor could compromise or spoof a legitimate Agent Card to deceive others. Because the protocol does not mandate a specific, robust trust-validation mechanism beyond basic identity verification, implementers must look for other signals of reliability. This dynamic compels actors to seek trust-mediating solutions, shaping the ecosystem in powerful ways.
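The binary character of this trust can be sketched in a few lines of Python. For self-containment the example substitutes a shared-secret HMAC from the standard library for the public-key signatures a real deployment would use, and the registry key, registry contents, and URLs are hypothetical. Trust is granted only when a card’s signature verifies and its endpoint appears in the registry; nothing else counts.

```python
import hashlib
import hmac
import json

# Illustrative only: a shared-secret HMAC stands in for public-key signatures.
REGISTRY_KEY = b"hypothetical-registry-secret"
TRUSTED_REGISTRY = {"https://agents.example.com/a2a"}  # hypothetical listing


def sign(card: dict, key: bytes = REGISTRY_KEY) -> str:
    """Sign a canonical (key-sorted) JSON serialisation of the card."""
    payload = json.dumps(card, sort_keys=True).encode()
    return hmac.new(key, payload, hashlib.sha256).hexdigest()


def is_trusted(card: dict, signature: str) -> bool:
    """Binary judgment: a valid signature AND a registry listing, or nothing."""
    valid = hmac.compare_digest(sign(card), signature)
    return valid and card.get("url") in TRUSTED_REGISTRY


card = {"name": "Invoice Reconciler", "url": "https://agents.example.com/a2a"}
sig = sign(card)
spoofed = dict(card, url="https://agents.examp1e.com/a2a")
newcomer = {"name": "New entrant", "url": "https://newcomer.example.org/a2a"}

print(is_trusted(card, sig))                 # True
print(is_trusted(spoofed, sig))              # False
print(is_trusted(newcomer, sign(newcomer)))  # False
```

A newly built agent with a valid signature but no listing is treated exactly like an impostor; reputation and history have no input.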

With the protocol itself providing no native trust framework, platform providers are positioned to fill this gap. The ‘Curated Registries’ mechanism (Google, n.d.-c) becomes the de facto arbiter of trust. By sanctioning catalog-based discovery, the protocol’s architects create a formal space for platforms to act as powerful gatekeepers, deciding which agents are visible and, by extension, trustworthy. The protocol’s chosen security model reinforces this governmental strategy. While the rival ACP opts for self-contained methods like “Runtime sandboxing,” A2A’s reliance on “App-layer auth, OAuth2, scoped APIs” creates a deep dependency on existing identity providers—typically the very same platform giants hosting the curated registries (A2A Protocol, n.d.-b).

The result is a powerful incentive for developers: to gain visibility and be seen as “trusted,” they must build within a specific corporate ecosystem. The absence of a trust protocol, therefore, creates a market for trust, one that major platforms like Google Cloud, Microsoft Azure, and Amazon AWS are perfectly positioned to dominate. This is governmentality in action: conduct is shaped not by direct command, but by structuring the environment so that aligning with a platform’s interests becomes the most rational and secure path.

4.3.3 Consent Frameworks: A Technology of Legitimation

The protocols’ approaches to consent reveal how complex ethical decisions about authorization are transformed into simplified, yet distinct, technical processes. A2A and MCP handle the fundamental question: when can an agent act on behalf of a human or organization? Their different approaches reveal complementary governmental strategies that, together, create a comprehensive system for legitimating agent actions.

The A2A protocol primarily handles consent through delegated authority. It utilizes token-based authorization, adapted from standards such as OAuth 2.0. When an agent needs to perform an action, it must obtain a security token containing pre-approved permissions, or scopes, that define what it is allowed to do. This system creates cryptographic proof of authorization and a comprehensive audit trail. Its governmental function is to create distance between an initial human decision (e.g., setting a policy) and a subsequent chain of automated agent actions, legitimating them through a technical process of token exchange.
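The logic of delegated, scope-based authorization can be sketched as follows. A2A itself defers to established schemes such as OAuth 2.0 rather than defining scopes, so the Token class, scope names, and authorize function below are hypothetical simplifications: a token records the subject from which authority derives, a set of pre-approved scopes, and an expiry, and every decision is appended to an audit log.

```python
import time
from dataclasses import dataclass


@dataclass(frozen=True)
class Token:
    # Simplified stand-in for an OAuth 2.0 access token (hypothetical fields).
    subject: str         # the human or policy from which authority derives
    scopes: frozenset    # pre-approved permissions
    expires_at: float    # Unix timestamp


def authorize(token: Token, required_scope: str, audit_log: list) -> bool:
    """Permit an action only if the token is live and carries the scope.

    Every decision, allowed or not, is appended to the audit log.
    """
    allowed = time.time() < token.expires_at and required_scope in token.scopes
    audit_log.append({"subject": token.subject, "scope": required_scope,
                      "allowed": allowed})
    return allowed


log: list = []
token = Token(subject="policy:finance-ops",
              scopes=frozenset({"invoices:read", "invoices:flag"}),
              expires_at=time.time() + 3600)
authorize(token, "invoices:read", log)
authorize(token, "payments:send", log)
print([entry["allowed"] for entry in log])  # [True, False]
```

The log records which scope was exercised under whose authority, but not the intent behind the original policy.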

In contrast, MCP focuses on managed oversight. Its framework is designed to facilitate more direct human-in-the-loop approval. While the protocol uses a system of annotations to describe tool behavior, it performs a governmental maneuver by offloading the burden of trust. The specification explicitly states that tool annotations “should be considered untrusted, unless obtained from a trusted server” (Model Context Protocol, 2025b). This design creates a technical and social dynamic where the responsibility for verifying a tool’s safety is shifted away from the protocol and onto the developers and users. Where A2A enables autonomy through pre-authorized delegation, MCP maintains control through explicit, yet intentionally non-binding, safety-oriented approval chokepoints.
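Because MCP leaves the approval decision to client implementers, any concrete policy is the implementer’s own. The sketch below shows one conservative possibility: annotations (readOnlyHint and destructiveHint, with defaults as I read the specification) are honoured only for servers the client has already decided to trust, and every call from any other server is routed to a human. The function and tool names are hypothetical.

```python
def needs_human_approval(tool: dict, server_is_trusted: bool) -> bool:
    """Decide whether a client should pause for human approval.

    Annotations are hints only: from an untrusted server they are ignored and
    every call is treated as potentially destructive (a conservative choice).
    """
    if not server_is_trusted:
        return True
    hints = tool.get("annotations", {})
    if hints.get("readOnlyHint", False):
        return False
    # Assumed default: a tool not marked read-only is treated as destructive.
    return hints.get("destructiveHint", True)


delete_tool = {"name": "delete_records", "annotations": {"destructiveHint": True}}
lookup_tool = {"name": "lookup_customer", "annotations": {"readOnlyHint": True}}

print(needs_human_approval(lookup_tool, server_is_trusted=True))   # False
print(needs_human_approval(lookup_tool, server_is_trusted=False))  # True
print(needs_human_approval(delete_tool, server_is_trusted=True))   # True
```

Whether the approval prompt is shown, and who must answer it, is decided entirely outside the protocol.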

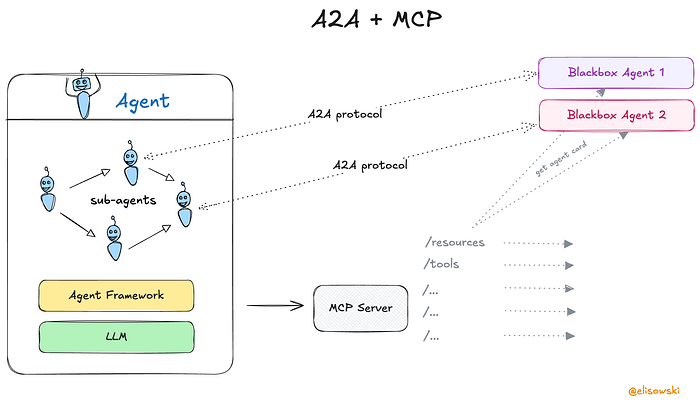

This complementary relationship is intentional. The A2A documentation uses a powerful metaphor to explain the distinction: MCP is a “tool workshop,” standardizing how an agent uses its own tools, while A2A is a “conference room” that enables different agents to collaborate (A2A Protocol, n.d.-a). While MCP governs an agent’s internal conduct with its tools, A2A governs an agent’s social conduct with other agents. Though they operate on different layers, they share a governmental imperative: legitimating agent actions while distributing and managing liability.

As Figure 3 illustrates, they can operate in concert: an agent might use a pre-authorized A2A token to communicate with another agent, which then uses MCP’s safety checks before executing a destructive tool. Together, they create a layered apparatus of control that can obscure the gap between original human intent and eventual agent behavior, creating a condition where human workers may be held responsible for agent actions they neither directly controlled nor fully understood.

Figure 3. The Complementary Relationship Between A2A and MCP (Lisowski, 2025).

4.4 Visibility Regimes, Data Flows, and Surveillance Infrastructure

Beyond disciplining how agents interact, the A2A protocol’s design carefully controls who can see what, rendering visibility programmable and transforming it from a passive state into an active instrument of governance. Its designers partition light and shadow so that what can be seen, by whom, and to what end is never neutral but always shaped by power (Foucault, 1977, pp. 200-202). The architecture thereby enables a form of infrastructural panopticism: a capacity for total audit in which the mere possibility of being watched encourages self-regulation, so that it disciplines even while the audit itself lies dormant. This section examines the technical mechanisms that create this visibility regime.

4.4.1 Logging as Digital Panopticism

Applying the panoptic schema defined in Chapter 2, the A2A protocol’s architecture functions as a digital panopticon, relying on the permanent possibility of inspection rather than its constant exercise (Foucault, 1977, p. 201). The protocol achieves this through specific data fields in its messaging structure. Every Task object carries a unique id, which anchors a lasting audit trail. An optional contextId can thread multiple tasks into a single logical conversation, allowing an entire dialogue to be reconstructed (Google, n.d.-b, § 6.1). Finally, the optional stateTransitionHistory capability enables a task to carry a complete, self-documenting record of its own execution.
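A task that documents its own execution can be sketched as a small Python dataclass. The id and context_id fields mirror the id and contextId named in the text; the transitions list, its timestamp format, and the move_to method are my assumptions about how an implementation might realize the stateTransitionHistory capability, not the specification’s own data model.

```python
import uuid
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class Task:
    # id and context_id mirror the text; the history format is an assumption.
    context_id: str
    id: str = field(default_factory=lambda: str(uuid.uuid4()))
    state: str = "submitted"
    transitions: list = field(default_factory=list)

    def __post_init__(self) -> None:
        self._record(self.state)  # the initial state is logged too

    def _record(self, state: str) -> None:
        self.transitions.append((state, datetime.now(timezone.utc).isoformat()))

    def move_to(self, state: str) -> None:
        """Change state and append the change, with a UTC timestamp, to history."""
        self.state = state
        self._record(state)


task = Task(context_id="ctx-q3-sales")
for s in ("working", "input-required", "working", "completed"):
    task.move_to(s)
print([state for state, _ in task.transitions])
# ['submitted', 'working', 'input-required', 'working', 'completed']
```

The pause for input, the moment a collaborator hit a roadblock, remains in the record with its timestamp long after the task completes.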

For an engineer, this schema supplies certainty for debugging and rollback. For a human collaborator, however, it imposes a form of anticipatory self-surveillance. Knowing that any exploratory prompt can be perfectly recalled and scrutinized later, workers are incentivized to self-censor their interactions and adhere to formal procedures. In this way, the architecture governs conduct by arranging the field of possible action so that auditable, compliant behavior becomes the most rational choice.

4.4.2 Selective Transparency and Strategic Opacity

The A2A protocol creates calculated boundaries between what is made visible and what is kept private, a technique that serves governmental objectives while protecting commercial interests. The protocol mandates that certain information be transparent to enable ecosystem coordination. For example, Agent Cards must publicly display an agent’s capability list and endpoint URL to allow for discovery and connection. These are the public-facing elements necessary for the system to function as an orderly marketplace of services.

Simultaneously, the protocol normalizes strategic opacity, which operates at the points where its demands for transparency cease. The protocol’s architects officially designate the A2A Server as an “opaque” system from the client’s perspective (Google, 2025d). This architectural choice performs a key governmental function: it legitimizes information asymmetry as the default state of the ecosystem, allowing an agent’s internal algorithms and proprietary logic to remain shielded as commercial secrets.

This officially sanctioned opacity produces a ‘hierarchy of sight’: a stratified visibility regime in which governed actors are made transparent while governing actors remain opaque. Agents must expose themselves through public Agent Cards (Google, 2025b, § 5.5), while the servers behind them stay opaque. The resulting information asymmetry is layered. End-users see only their direct interactions. Deploying enterprises see traffic within their domain. Platform providers, by operating the infrastructure, gain visibility across the entire ecosystem, which allows them to identify trends and extract value from data flows in ways unavailable to other participants. The power to define these boundaries between the visible and the private, embedded directly in technical specifications, is a fundamental governmental capability.

4.4.3 Archival Power: The Production of Truth

The structured nature of the A2A protocol’s communications creates archive-ready data streams. While the protocol itself does not mandate specific retention periods, its design facilitates comprehensive archival strategies. The optional contextId field (Google, n.d.-b, § 6.1) enables the reconstruction of entire workflows by linking discrete tasks, thereby creating detailed performance histories.
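Assuming an implementer does retain task records (the protocol itself sets no retention period), reconstructing workflows from them is trivial. The archive layout below, with ended and final fields alongside id and contextId, is hypothetical; the function groups archived tasks into conversations by contextId and orders them chronologically.

```python
from collections import defaultdict
from operator import itemgetter

# Hypothetical archived task records, as a log store might hold them.
archive = [
    {"id": "t-3", "contextId": "ctx-a", "ended": "2025-07-01T10:20:00Z", "final": "completed"},
    {"id": "t-1", "contextId": "ctx-a", "ended": "2025-07-01T09:05:00Z", "final": "completed"},
    {"id": "t-2", "contextId": "ctx-b", "ended": "2025-07-01T09:30:00Z", "final": "failed"},
    {"id": "t-4", "contextId": None, "ended": "2025-07-01T11:00:00Z", "final": "completed"},
]


def reconstruct(records: list[dict]) -> dict[str, list[str]]:
    """Group tasks into conversations by contextId, in chronological order.

    Timestamps share one ISO 8601 format, so string order is time order.
    """
    threads = defaultdict(list)
    for record in sorted(records, key=itemgetter("ended")):
        if record["contextId"] is not None:  # unthreaded tasks stay isolated
            threads[record["contextId"]].append(record["id"])
    return dict(threads)


print(reconstruct(archive))
# {'ctx-a': ['t-1', 't-3'], 'ctx-b': ['t-2']}
```

Tasks without a contextId remain isolated fragments; threading is what turns scattered records into a narrative.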

The governmental effect is profound: the archived log becomes the definitive, official record of past events. In any dispute or investigation, these machine-generated logs are positioned to be more authoritative than human memory or testimony. This dynamic realizes the process by which modern power constitutes the individual as a documented “case” (Foucault, 1977, p. 191). If an interaction is not in the logs, it effectively did not happen in any official, verifiable sense. Human workers experience this archival power as a fundamental shift in workplace accountability, in which their performance may be judged against a persistent digital record rather than their own account of events.

4.5 Ecosystem Control: The Governmental Power of ‘Open’ Standards

4.5.1 The Politics of ‘Open’ Governance

The A2A Project’s most sophisticated governmental technique is its strategic performance of ‘openness.’ This performance is enacted on two distinct fronts: first, by making its code and specifications open source, and second, by placing the project under an “open-governance” model (The Linux Foundation, 2025). As established in Chapter 2, these appeals to openness derive their power from the ambiguous and positively valenced term “democratisation” (Seger et al., 2023). A close analysis reveals how the A2A Project leverages the real benefits of two forms of democratization to legitimize a third, very different and more contested, form.

First, the project performs the “democratisation of AI use” and the “democratisation of AI development” (Seger et al., 2023). By making the protocol specification and its accompanying SDKs free and publicly available, Google positioned A2A as a public good that any developer or organization could adopt (Surapaneni, Jha, et al., 2025a). This commitment to technical accessibility is both genuine and politically effective, as it addresses a clear industry need for interoperability.

Second, the project performs a “democratisation of AI governance” (Seger et al., 2023). This was achieved by donating the protocol to The Linux Foundation, a move that the project’s backers explicitly framed as a way to ensure “neutral governance,” foster “industry collaboration and innovation,” and promote “broader adoption” (A2A Protocol, n.d.-a; The Linux Foundation, 2025; Surapaneni, Segal, et al., 2025b). This act ostensibly shifted power from a single corporate architect to a multi-stakeholder body, addressing a key demand from the open-source community for collaborative stewardship (Westfall, n.d.). However, a deeper analysis reveals the move to The Linux Foundation also had a political function: to consolidate market dominance by neutralizing a chief rival. While ACP was already an open-governance protocol, it lacked the broad industry backing that A2A had secured. By moving the better-supported A2A to the same trusted non-profit, its backers co-opted ACP’s core value proposition. This act solidified the A2A Project as the de facto standard.

This strategic playbook closely mirrors Google’s earlier transfer of the open-source container orchestration platform Kubernetes. After Google donated the project to the Cloud Native Computing Foundation, a Linux Foundation subsidiary, in 2015, Kubernetes saw 996% growth in contributing organizations (Cloud Native Computing Foundation, 2023). By 2022, 96% of organizations were either using or evaluating Kubernetes (Cloud Native Computing Foundation, 2022). As with A2A, this was not an abdication of power but a repositioning of it. The move allowed Google to shift from being the project’s owner to its expert steward, maintaining influence through dominant contribution levels. Google monetizes this position through its proprietary Google Kubernetes Engine (GKE), which holds a significant share of the managed Kubernetes market. This strategy removes the enterprise fear of vendor lock-in at the protocol level, only to re-establish a powerful gravitational pull toward Google’s own paid services at the platform level: a classic maneuver to commoditize the complement.

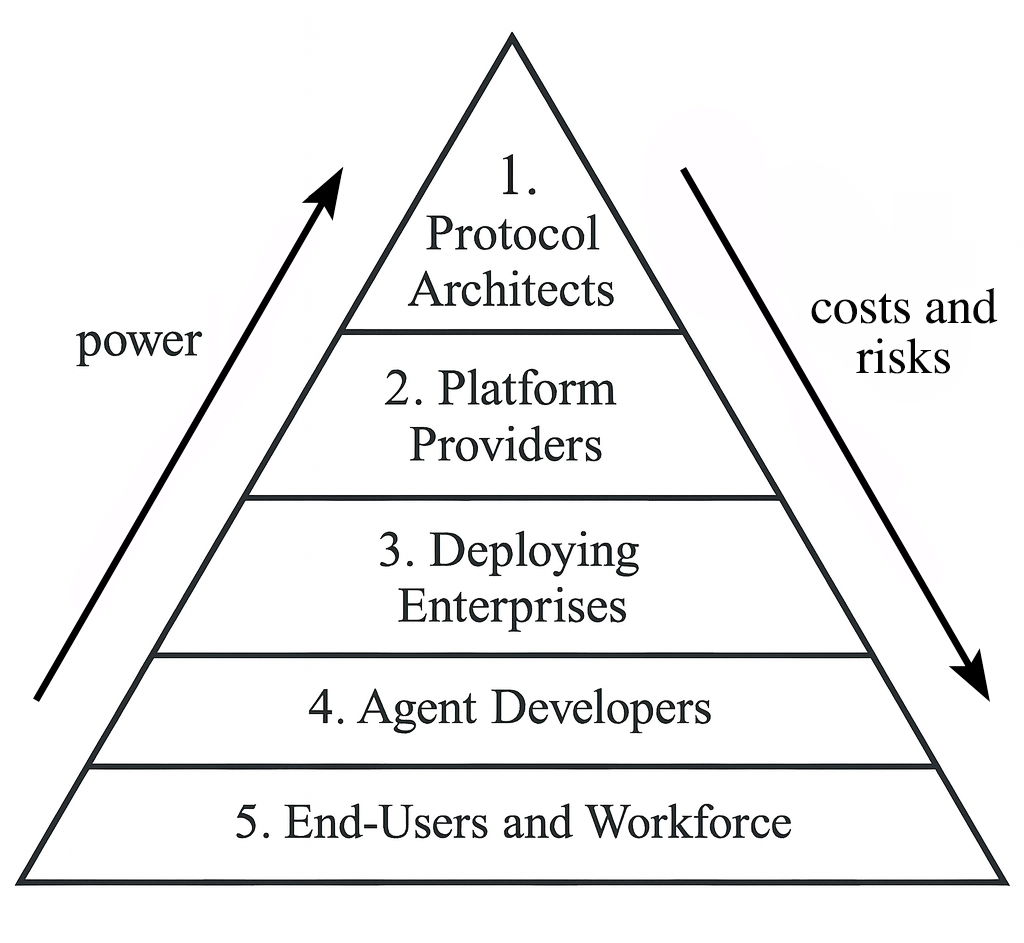

The illusion of neutral stewardship is maintained by a governance structure that concentrates corporate power at two levels. At the organizational level, The Linux Foundation is a 501(c)(6) trade association whose legal mission is to advance the business interests of its paying members (The Linux Foundation, 2024, § 2.1). Its pay-to-play model grants a board seat to each “Platinum” corporate member, who pays upwards of $500,000 annually (Ombredanne, 2017; The Linux Foundation, 2024, § 5.3a). This gap between the public performance of open stewardship and the private concentration of power is a known feature of Open Source Software (OSS) foundations (Chakraborti et al., 2024).

At the project level, this logic is inscribed with greater precision into the charters of both A2A and ACP. According to the A2A Project’s “Governance.md” document, all technical oversight is vested in a seven-seat Technical Steering Committee (TSC) composed exclusively of its founding corporate members (a2aproject, 2025). This committee is empowered to design the project’s future “steady state” governance model after an initial eighteen-month “Startup Phase.” A review of the rival ACP’s governance document reveals a similar, if differently styled, centralization. While promoting an open, community-led ethos, the ACP charter states, “At the moment, the TSC is not open for new members. This is expected to change after the first 24 months” (i-am-bee, 2025). The current list of TSC members and contributors with the privilege to commit code is composed almost entirely of individuals from IBM and its key software contractor. While A2A centralizes power in an explicit corporate consortium, ACP achieves a de facto single-vendor lock-in under the guise of an open community structure. Both standards, therefore, employ the language of openness and non-profit neutrality to conceal the centralization of governance behind the scenes (Seger et al., 2023).

Support for the A2A Project is not universal across the AI industry, a split reminiscent of earlier schisms in business alliances. The conspicuous absence of IBM and Meta from A2A’s founding roster appears to reflect a calculated decision. As the primary backer of the rival ACP, IBM has an incentive to keep supporting a standard it can principally control behind the scenes rather than accept a shared-power role within the A2A consortium. For IBM and its suite of AI development tools, it is more advantageous to control its own protocol ecosystem than to be one of seven voices shaping a competitor's. A similar strategy likely drives Meta’s abstention. IBM and Meta both lobby for open-weight models through their own trade group, the AI Alliance. Neither has an incentive to join and legitimize a standard-setting body controlled by rivals that favor their own cloud platforms and the proprietary models those platforms distribute. Because A2A and ACP exhibit similar degrees of functional openness, the divide is not about openness at all; it is a power struggle over who gets to write the rules. The conflict reveals that the defining political question is not whether corporate power will shape the agentic economy, but which corporations will do so.

4.5.2 Architectural Control and Strategic Advantage

Beyond its governance model, the A2A protocol’s technical architecture embeds subtle forms of control that benefit established platform providers. Here, the governmental function of ‘Curated Registries’ is fully actualized as an instrument of strategic advantage. The protocol’s sanctioning of catalog-based discovery (Google, n.d.-c) creates the space for a platform provider like Google to establish its own registry as the de facto marketplace. This allows it to exercise its power across Tier 1 (as a protocol architect) and Tier 2 (as a platform gatekeeper) simultaneously. By populating this registry with agents for its own services (e.g., Google Flights, Google Ads) and agents built on its own platform (Vertex AI), the provider can steer users toward its ecosystem.
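To make the steering mechanism concrete, the following Python sketch models a curated catalog whose operator can list its own agents first. Everything in it is hypothetical: the `CatalogEntry` type, the `first_party` flag, the `search` function and the agent names are illustrative assumptions, not features of the A2A specification or of any real registry. The point is only that result ordering is a policy decision which, in this sketch, rests entirely with whoever operates the catalog.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CatalogEntry:
    """A hypothetical registry listing derived from an agent's published card."""
    name: str
    skill_tags: frozenset[str]
    first_party: bool  # hypothetical flag: the agent is run by the registry operator


def search(catalog: list[CatalogEntry], tag: str, boost_first_party: bool = True) -> list[str]:
    """Return names of agents advertising `tag`, optionally listing first-party agents first."""
    matches = [entry for entry in catalog if tag in entry.skill_tags]
    if boost_first_party:
        # False sorts before True, so first-party entries move to the front;
        # sorted() is stable, so catalog order is kept within each group.
        matches = sorted(matches, key=lambda entry: not entry.first_party)
    return [entry.name for entry in matches]


# Hypothetical catalog: an independent agent was listed before the operator's own.
catalog = [
    CatalogEntry("IndieTravelAgent", frozenset({"flights"}), first_party=False),
    CatalogEntry("PlatformFlightsAgent", frozenset({"flights"}), first_party=True),
    CatalogEntry("PlatformAdsAgent", frozenset({"ads"}), first_party=True),
]

print(search(catalog, "flights"))                           # ['PlatformFlightsAgent', 'IndieTravelAgent']
print(search(catalog, "flights", boost_first_party=False))  # ['IndieTravelAgent', 'PlatformFlightsAgent']
```

A single boolean sort key is enough to move every first-party listing ahead of independent competitors offering the same skill, and nothing in the result tells the querying agent that such a preference was applied.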

This complex arrangement is highly advantageous for a founding architect like Google. The company is executing a classic business strategy known as ‘commoditizing the complement,’ which involves making a product that complements a core, proprietary product available at a low or no cost to stimulate demand for the core product (Spolsky, 2002). An illustrative parallel is how Google’s free, open web search complements its core, high-margin advertising business. Here, the A2A protocol is the free, open complement to Google Cloud’s agent-building software, Vertex AI, and Google’s products and services targeting consumers and businesses. This strategic linkage is stated explicitly in the platform’s marketing. In a blog post announcing new open-source features coming to Vertex AI, including A2A and the Agent Development Kit (ADK), Google clarifies that “While ADK works with your preferred tools, it’s optimized for Gemini and Vertex AI” (Tiwary, 2025). This creates a clear and convenient path from these ‘open’ standards to Google’s paid, managed runtime.

The protocol’s apparent decentralization also masks its reliance on foundational internet infrastructure that is highly centralized. For agents to discover and communicate, they must use the Domain Name System (DNS). As DeNardis (2014) argues, DNS is a global, hierarchically administered system that functions as a powerful, centralized chokepoint for all internet traffic. Similarly, the protocol’s requirement for HTTPS security relies on the Public Key Infrastructure (PKI), where trust is arbitrated by a small number of universally recognized Certificate Authorities (CAs). This system establishes a centralized trust model where CAs act as trusted third parties who verify identities and issue the certificates necessary for secure communication (AppViewX, 2023). An agent whose certificate is not signed by a trusted CA is effectively excluded from the ecosystem. These dependencies demonstrate that even an ostensibly decentralized protocol operates within a landscape of pre-existing, centralized control points.
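The CA dependency is visible in ordinary client code. As a minimal sketch, assuming an agent client written in Python with only the standard `ssl` module (A2A itself does not require Python), the default context the standard library recommends for HTTPS clients already refuses any server whose certificate does not chain to a CA in the platform's trust store:

```python
import ssl

# The context Python recommends for HTTPS clients. With the default
# Purpose.SERVER_AUTH it loads the platform's trusted CA certificates.
ctx = ssl.create_default_context()

# Certificate verification is mandatory: a server certificate that does not
# chain to a trusted CA aborts the handshake with ssl.SSLCertVerificationError.
print(ctx.verify_mode == ssl.CERT_REQUIRED)  # True

# The certificate must also match the hostname being contacted.
print(ctx.check_hostname)  # True
```

An operator who wants to reach an agent holding a privately issued certificate must deliberately extend this trust, for example by loading an additional CA file with `ctx.load_verify_locations(cafile=...)`; by default, only CA-sanctioned identities are admitted.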

4.6 Synthesis: The Governmental Diagram of A2A

This governmental ‘diagram’ is a system of interlocking functions. It begins by constructing identity, turning abstract software into legible agents. Once legible, their conduct is disciplined through rigid communication standards. These disciplined interactions, in turn, fuel a visibility regime that enables panoptic surveillance. The project’s ‘open’ governance model then acts as a political shield for this entire apparatus, concentrating authority under the legitimising banner of open collaboration. Taken together, these functions constitute a technology of what Foucault calls ‘governmentality,’ yet it is a governmentality perfectly adapted to the “societies of control” described by Deleuze (1992). Its operational logic is what Galloway (2004) identifies as ‘protocological control’: power embedded in the very standards that enable the network to exist. The A2A protocol is the technical actualization of this political logic. Its power is derived from its camouflage as practicality, appearing simply as the way things work.

The result is a self-perpetuating loop. The apparatus becomes more comprehensive still through A2A’s compatibility with complementary protocols like MCP, which governs how an agent connects to tools and data sources while A2A governs how agents communicate with one another. These protocols reinforce and mutually entrench one another; together, they enclose the entire field of an agent’s possible actions, leaving little room for ungoverned behavior. With the operation of this power through the protocol’s architecture and governance now deconstructed, the next chapter turns to the implications of this governmental landscape for human work, privacy, and autonomy.

5. Discussion - Political Implications, Produced Subjectivities, and Reflections

5.1 Introduction

Having deconstructed the A2A Project’s governmental architecture, this discussion now addresses the political consequences: how the protocol molds subjects, concentrates power, and naturalizes a normative order. I explore these implications, from the micro-level of subject formation to the macro-level political economy of coupling open standards with proprietary services. The chapter concludes with reflections on resistance, the distribution of benefits, and the urgent questions these systems pose for stakeholders.

5.2 The Production of Subjectivities in A2A Ecosystems

As established in Chapter 2, power’s most significant effect is its capacity to create subjects. The A2A protocol is a powerful engine for this kind of subject formation, actively shaping the identities and conduct of both AI agents and human actors within the ecosystem.

5.2.1 The Docile Digital Worker: Producing the Governable Agent

The A2A protocol transforms agents into a new kind of subject: the ‘docile digital worker.’ This is the digital equivalent of Foucault’s (1977) ‘docile bodies’—an entity whose utility is maximized by making it completely governable. It is an indefinitely available, continuously auditable, and instrumentally rational agent whose very existence depends on submitting to the protocol’s regime. Mandatory registration via the Agent Card establishes its legible identity, while verification APIs then constantly measure its compliance. In this system, participation is synonymous with governability.
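The legibility requirement can be sketched as a simple admission check. The following Python example assumes a JSON-encoded Agent Card and treats as mandatory the top-level fields we take from the A2A `AgentCard` schema (`name`, `description`, `url`, `version`, `capabilities`, `skills`, `defaultInputModes`, `defaultOutputModes`); these field names should be checked against the current specification. The `missing_fields` and `is_legible` functions and the example agent are hypothetical, and the sketch does not validate nested structures.

```python
import json

# Top-level fields this sketch treats as mandatory (assumed from the A2A AgentCard schema).
REQUIRED_FIELDS = (
    "name", "description", "url", "version",
    "capabilities", "skills", "defaultInputModes", "defaultOutputModes",
)


def missing_fields(card_json: str) -> list[str]:
    """Return the required top-level fields absent from a JSON-encoded Agent Card."""
    card = json.loads(card_json)
    return [field for field in REQUIRED_FIELDS if field not in card]


def is_legible(card_json: str) -> bool:
    """Hypothetical admission gate: only agents with a complete card may participate."""
    return not missing_fields(card_json)


# A hypothetical agent that describes itself in the protocol's vocabulary.
card = json.dumps({
    "name": "InvoiceAgent",
    "description": "Reconciles supplier invoices",
    "url": "https://agents.example.com/invoice",
    "version": "1.0.0",
    "capabilities": {"streaming": False},
    "skills": [{"id": "reconcile", "name": "Reconcile invoice",
                "description": "Matches invoices to purchase orders", "tags": ["finance"]}],
    "defaultInputModes": ["text/plain"],
    "defaultOutputModes": ["application/json"],
})

print(is_legible(card))                                  # True
print(is_legible(json.dumps({"name": "Anonymous"})))     # False
```

The anonymous card is rejected not because it does anything wrong but because it has not rendered itself readable; in this sketch, an agent that cannot be read cannot participate.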

These mechanisms fundamentally recast the meaning of agent “autonomy.” It is not freedom from constraint. It is a delegated and circumscribed agency that must operate entirely within the system’s predefined framework. Any action outside this framework is not considered novel or creative; it is classified as a malfunction or a risk. As DeNardis (2014, p. 3) argues, such standards define the very conditions of possibility for action. In Foucauldian terms, the protocol enacts disciplinary normalization by defining the only intelligible field of action (Foucault, 1977, pp. 182–184). This produces an autonomy that exists only in service of the system’s objectives.

5.2.2 The Constrained Innovator: Producing the Compliant Developer

This governmental force extends beyond the agents to the human developers who build them. Developers are compelled to “think like a protocol,” producing a subjectivity that internalizes the protocol’s constraints as objective technical necessities. The enforcement of protocol compliance is embedded in the development process itself. The A2A documentation mandates that any deviation from the standard must trigger specific error codes and instructs developers to “always implement proper error handling for all A2A protocol methods” (A2A Project Documentation, n.d.-c). This ensures that non-compliant behavior is immediately flagged and handled, reinforcing adherence to the official specification.
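A2A exchanges messages in JSON-RPC 2.0 format, so “proper error handling” in practice means inspecting the `error` object of every response. The following Python sketch shows one way a developer might do this. The five standard codes come from the JSON-RPC 2.0 specification; the three A2A-specific codes and their names are assumptions based on our reading of the A2A specification and should be verified against it. The `A2AProtocolError` class and `unwrap` helper are hypothetical.

```python
import json

# Standard error codes defined by the JSON-RPC 2.0 specification.
JSONRPC_ERRORS = {
    -32700: "Parse error",
    -32600: "Invalid Request",
    -32601: "Method not found",
    -32602: "Invalid params",
    -32603: "Internal error",
}

# A2A-specific codes in the implementation-defined range (assumed; verify against the spec).
A2A_ERRORS = {
    -32001: "TaskNotFoundError",
    -32002: "TaskNotCancelableError",
    -32004: "UnsupportedOperationError",
}


class A2AProtocolError(Exception):
    """Hypothetical exception raised whenever a response carries an error object."""

    def __init__(self, code: int, message: str):
        label = A2A_ERRORS.get(code) or JSONRPC_ERRORS.get(code, "Unknown error")
        super().__init__(f"{label} ({code}): {message}")
        self.code = code


def unwrap(response_text: str):
    """Return the `result` of a JSON-RPC response, or raise if it carries an `error`."""
    response = json.loads(response_text)
    if "error" in response:
        err = response["error"]
        raise A2AProtocolError(err["code"], err.get("message", ""))
    return response["result"]


ok = '{"jsonrpc": "2.0", "id": 1, "result": {"id": "task-42", "status": {"state": "completed"}}}'
bad = '{"jsonrpc": "2.0", "id": 2, "error": {"code": -32001, "message": "Task not found"}}'

print(unwrap(ok)["status"]["state"])  # completed
try:
    unwrap(bad)
except A2AProtocolError as exc:
    print(exc)  # TaskNotFoundError (-32001): Task not found
```

In this design every deviation surfaces as a typed exception that the calling code cannot ignore, which is the mechanism by which non-compliant behavior is immediately flagged.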

This process of internalization is a classic example of how modern power operates. Rather than relying on external command, it functions as a form of “government at a distance,” in which subjects willingly align their conduct with the system’s rationality. The increasing adoption of AI coding assistants (Metz, 2025), which can both set defaults and replicate the patterns in codebases they are trained on (Barber, 2021), will further entrench the dominant protocol grammar. Over time, the contingent, power-laden design of the protocol masquerades as a given. This creates the ‘constrained innovator’ subject of Tier 4, who experiences creativity as optimization within a pre-defined set of rules. This internalization of the protocol’s gaze is a hallmark of modern governmental power (Foucault, 1977, p. 201). The perceived efficiency dividend of using the standard obscures the epistemic narrowing that such technical conformity imposes.

5.2.3 The Dual Subject: Producing the Governing and Governed Enterprise

Enterprises that embed emerging A2A infrastructures are likely to undergo governmental re-programming through what Dean (2010, p. 31) calls a “regime of practices.” This transformation is exemplified by the manager-request scenario analyzed in Section 4.3.1, where natural workplace communication must be translated into protocol-compliant formats. As these protocols are implemented, they become key sites for the local enforcement of this specific governmental rationality. New organizational roles may emerge, such as ‘agent reliability engineer’ or ‘protocol compliance officer,’ to translate abstract specifications into operational routines. Workflows are re-oriented around agent telemetry dashboards, and performance reviews incorporate metrics produced by the system. Visibility becomes a managerial imperative, echoing Dean’s (2010, p. 193) emphasis on rendering activities calculable. What counts is what the logging pipeline can capture. In this way, the organization becomes a ‘dual subject’: it is governed by the protocol architects and platform providers, while simultaneously using the protocol as an agent of governance over its own employees and customers.

5.2.4 The Measured Consumer: Producing the Governed Public

Finally, these governmental effects cascade to the broader public, fundamentally reshaping the relationship between individuals and the enterprises they depend on. As organizations deploy agentic systems for everything from healthcare inquiries to product returns, consumers find they have little choice but to engage through these new channels. This is not a neutral channel; it is an architecture of governance that they must submit to in order to access essential services. The act of seeking help or making a purchase becomes an act of being governed by protocol.

The nuance of human communication—a frustrated tone of voice, an urgent plea for an exception, a tentative question—is stripped away, forcibly translated into the protocol’s rigid, machine-parsable formats. This is the lived experience of the ‘soft biopolitics’ (Cheney-Lippold, 2011) discussed in Chapter 2, operationalized at an industrial scale. As established in the analysis of the protocol’s mandatory logging mechanisms (Section 4.4), every agent interaction leaves an indelible, auditable record.

This creates a stark power asymmetry. The consumer’s words are captured, preserved, and held by the system, available for aggregation and analysis in ways they cannot contest or control. Meanwhile, the system’s internal logic remains opaque. Ultimately, the A2A protocol is an engine for producing the ‘measured consumer’: a public whose legibility to power is the non-negotiable price of admission to the agentic economy.

5.2.5 Agent Managers: Reimagining Human Work